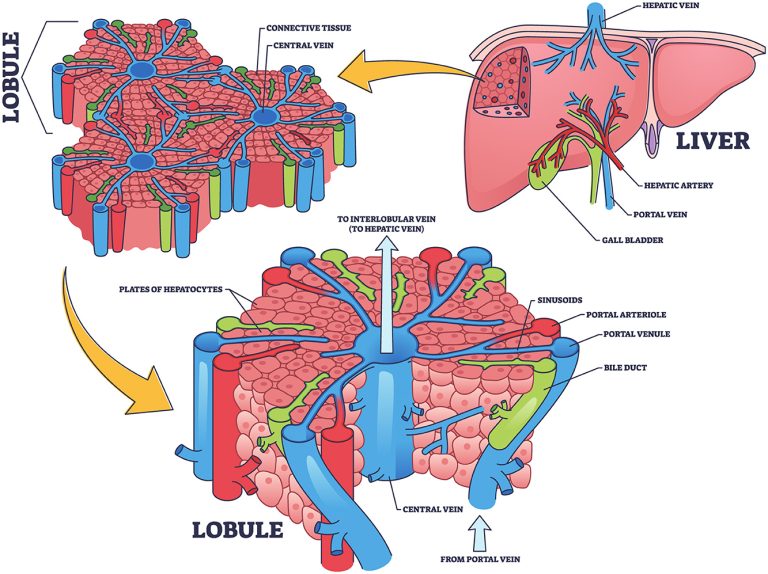

Artificial liver classifier: a new alternative to conventional machine learning models

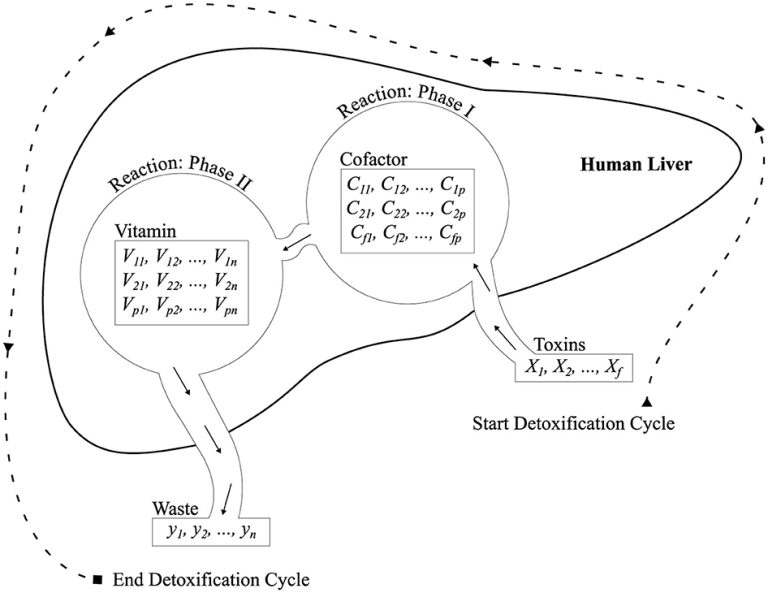

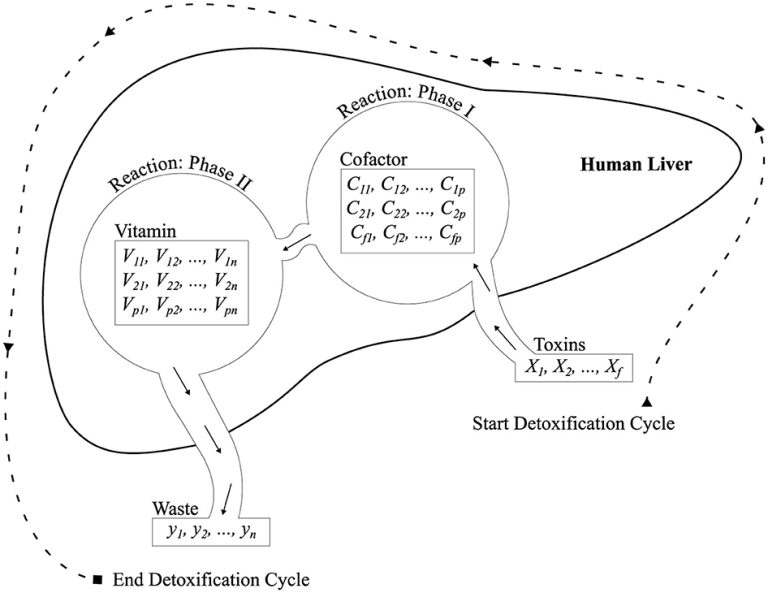

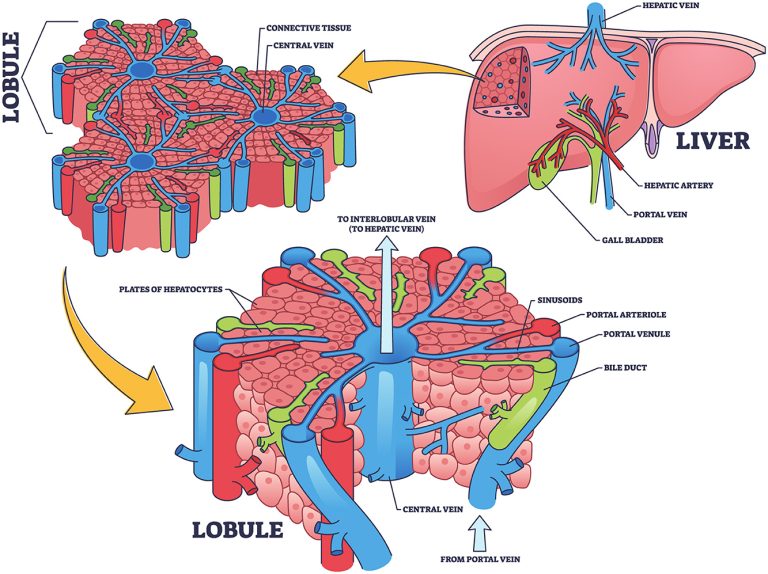

The paper presents the Artificial Liver Classifier (ALC), a simple and fast biologically inspired model optimized with the Improved FOX algorithm. Tested on five benchmark datasets, ALC achieved up to 100% accuracy on Iris and 99.12% on Breast Cancer, outperforming several traditional classifiers. It also showed smaller generalization gaps and lower loss, highlighting the potential of biologically inspired methods for efficient machine learning.

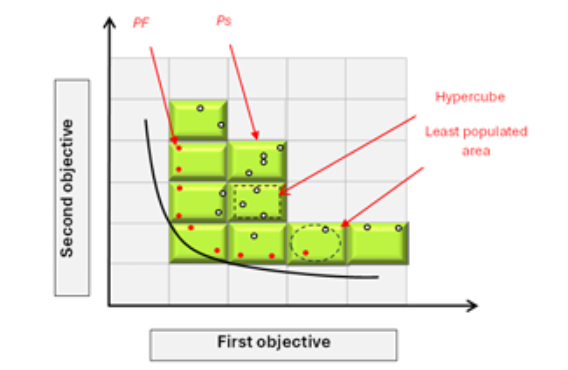

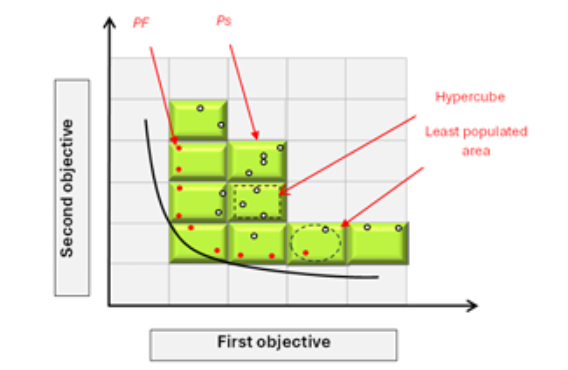

This project presents the Multi-Objective Ant Nesting Algorithm (MOANA), a novel extension of the Ant Nesting Algorithm (ANA), specifically designed to address multi-objective optimization problems (MOPs). MOANA incorporates adaptive mechanisms, such as deposition weight parameters, to balance exploration and exploitation, while a polynomial mutation strategy ensures diverse and high-quality solutions. The algorithm is evaluated on standard benchmark datasets, including ZDT functions and the IEEE Congress on Evolutionary Computation (CEC) 2019 multi-modal benchmarks. Comparative analysis against state-of-the-art algorithms like MOPSO, MOFDO, MODA, and NSGA-III demonstrates MOANA's superior performance in terms of convergence speed and Pareto front coverage.
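The evaluation ideas mentioned above (a ZDT benchmark and Pareto front coverage) can be illustrated with a minimal sketch. It shows the standard ZDT1 test function and a plain non-dominated filter for minimization. It does not implement MOANA itself, and the function names `zdt1`, `dominates`, and `pareto_front` are hypothetical choices made for this illustration.

```python
import math


def zdt1(x):
    """ZDT1 objectives for a decision vector x with every entry in [0, 1]."""
    f1 = x[0]
    g = 1 + 9 * sum(x[1:]) / (len(x) - 1)
    f2 = g * (1 - math.sqrt(f1 / g))
    return (f1, f2)


def dominates(a, b):
    """True if a is no worse than b in every objective and strictly
    better in at least one (both objectives are minimized)."""
    no_worse = all(p <= q for p, q in zip(a, b))
    strictly_better = any(p < q for p, q in zip(a, b))
    return no_worse and strictly_better


def pareto_front(points):
    """Keep only the points that no other point dominates."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


if __name__ == "__main__":
    # Illustrative candidate solutions, not results from the paper.
    candidates = [[0.0, 0.0, 0.0], [0.5, 0.0, 0.0], [0.5, 0.5, 0.5], [1.0, 0.0, 0.0]]
    objectives = [zdt1(x) for x in candidates]
    print(pareto_front(objectives))
```

A multi-objective optimizer would typically apply such a filter to its archive after each iteration, so that only non-dominated solutions are kept.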

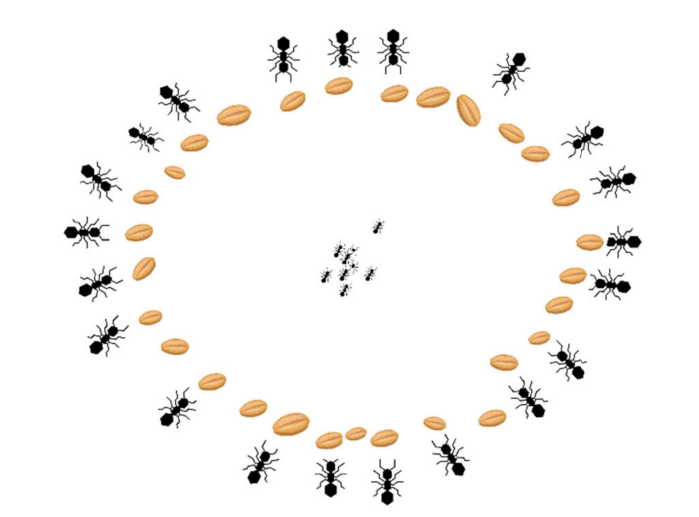

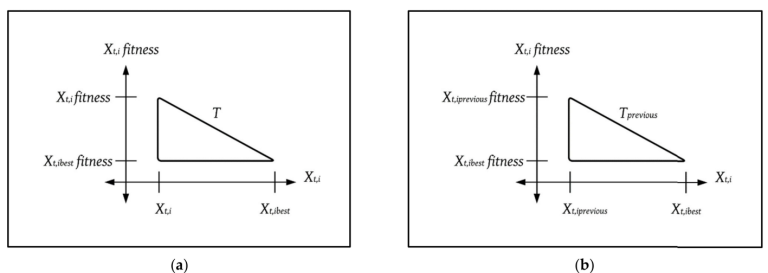

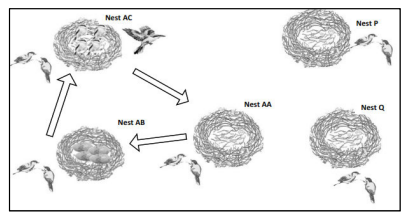

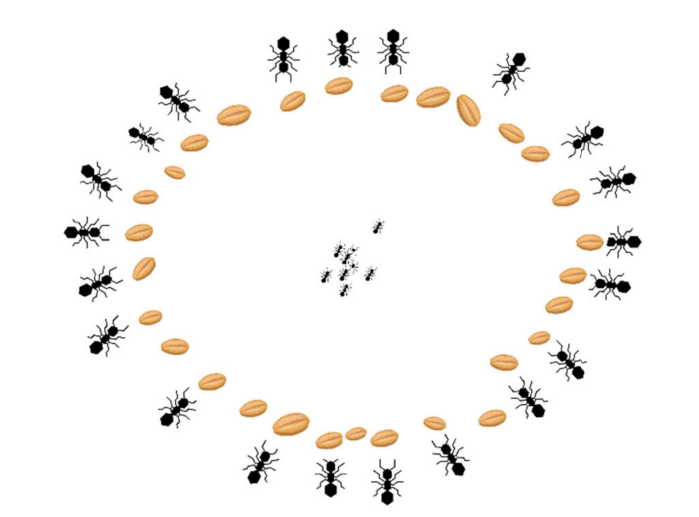

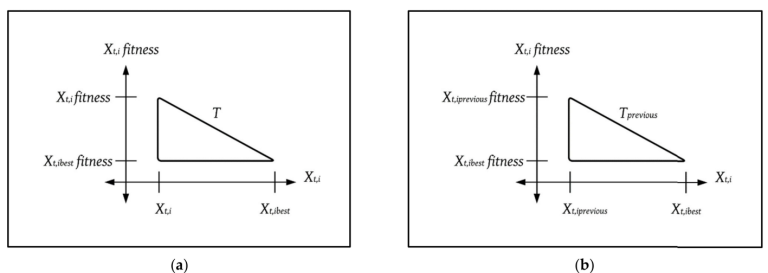

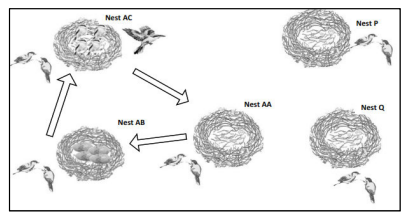

This project proposes a novel swarm intelligence algorithm called the Ant Nesting Algorithm (ANA). The algorithm is inspired by Leptothorax ants and mimics the behavior of ants searching for positions to deposit grains while building a new nest. Although it is inspired by the swarming behavior of ants, it has no algorithmic similarity to the ant colony optimization (ACO) algorithm. ANA is a continuous algorithm that updates a search agent's position by adding a rate of change (e.g., a step or velocity). It computes this rate of change in its own way: it uses the previous and current solutions and their fitness values during the optimization process to generate weights by means of the Pythagorean theorem. These weights drive the search agents during the exploration and exploitation phases.

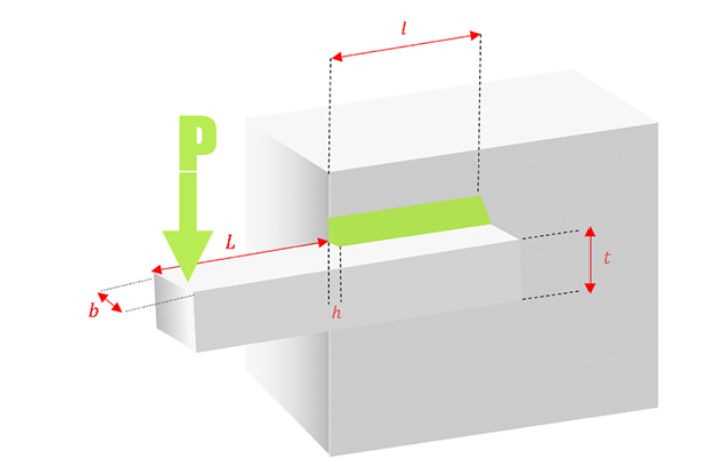

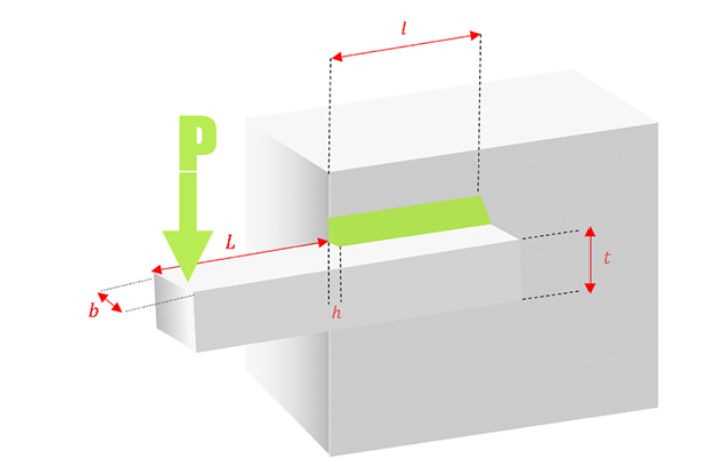

Optimisation algorithms are critical methods and tools for advancing engineering and science. However, researchers face challenges because the field lacks a standard approach to comparison and effective benchmarking. As a result, algorithms must be configured and executed manually, which is time-consuming and error-prone. This paper proposes OptimUse, a user-friendly Graphical User Interface (GUI) application built primarily to address these issues. OptimUse includes both single-objective and multi-objective metaheuristic optimisation algorithms that operate seamlessly while supporting parameter adjustment and execution. Users need no programming experience: they select algorithms from dropdown lists, tune parameters, and obtain the results of their chosen algorithm without writing any code. OptimUse also includes standard benchmark test functions as well as real-world problems, such as the beam problem, giving researchers a broad field of study. Because it is an open-source platform, OptimUse can be extended with new optimisation methods.

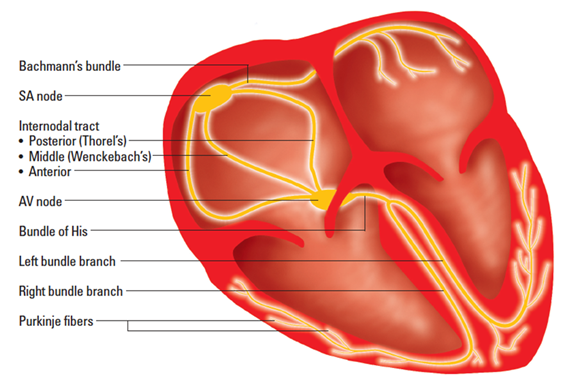

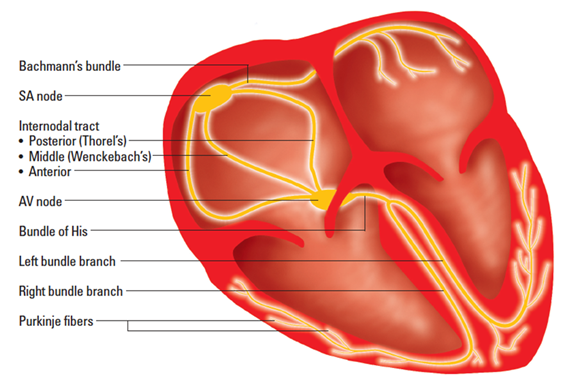

This work proposes a novel bio-inspired metaheuristic, the Artificial Cardiac Conduction System (ACCS), modeled on the human cardiac conduction system. The ACCS algorithm imitates the functional behavior of the human heart, which generates and sends signals to the heart muscle, causing it to contract. Four nodes in the myocardium layer participate in generating and controlling the heart rate: the sinoatrial node, the atrioventricular node, the bundle of His, and the Purkinje fibers. The algorithm implements the mechanism by which these four nodes control the heart rate.

The Shrike Optimization Algorithm (SHOA) is a swarm intelligence optimization algorithm. Many creatures that live in groups and survive into the next generation search for food randomly and follow the best individual in the swarm, a phenomenon known as swarm intelligence. Although swarm-based algorithms mimic these behaviors, they struggle to find optimal solutions in multi-modal problems. The swarming behaviors of shrike birds in nature serve as the main inspiration for the proposed algorithm. Shrike birds migrate from their territory to survive, and SHOA replicates the survival strategies that support their living, adaptation, and breeding. The two parts of optimization, exploration and exploitation, are designed by modeling shrike breeding and the search for food to feed nestlings until they are ready to fly and live independently. SHOA is benchmarked on 19 well-known mathematical test functions, 10 from CEC-2019, and 12 from the more recent CEC-2022, for a total of 41 competitive mathematical test functions, as well as four real-world engineering problems with different conditions, both constrained and unconstrained. The statistical results obtained from the Wilcoxon rank-sum and Friedman tests show that SHOA is statistically significantly superior to competitor algorithms on multi-modal benchmark problems. The results for the engineering optimization problems show that SHOA outperforms other nature-inspired algorithms in many cases.
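The Wilcoxon rank-sum comparison mentioned above can be illustrated with a minimal pure-Python sketch. It assumes the normal approximation without tie or continuity correction, which is only one common variant of the test. The function names `average_ranks` and `rank_sum_test` are hypothetical, and the sample values are made-up placeholders, not results from the SHOA study.

```python
import math


def average_ranks(values):
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values equal to the one at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def rank_sum_test(a, b):
    """Two-sided Wilcoxon rank-sum test using the normal approximation.

    Returns (z, p), where z is negative when sample a tends to be smaller.
    """
    n1, n2 = len(a), len(b)
    ranks = average_ranks(list(a) + list(b))
    w = sum(ranks[:n1])                       # rank sum of the first sample
    mean = n1 * (n1 + n2 + 1) / 2             # expected rank sum under H0
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2))      # two-sided p-value
    return z, p


if __name__ == "__main__":
    # Placeholder best-fitness values over repeated runs (lower is better).
    algorithm_a = [0.11, 0.09, 0.14, 0.10, 0.12]
    algorithm_b = [0.21, 0.19, 0.25, 0.18, 0.22]
    z, p = rank_sum_test(algorithm_a, algorithm_b)
    print(f"z = {z:.3f}, p = {p:.4f}")
```

In a benchmarking study, such a test would usually be run once per test function on the final fitness values of two algorithms, with a small p-value suggesting that the difference between them is unlikely to be due to chance.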

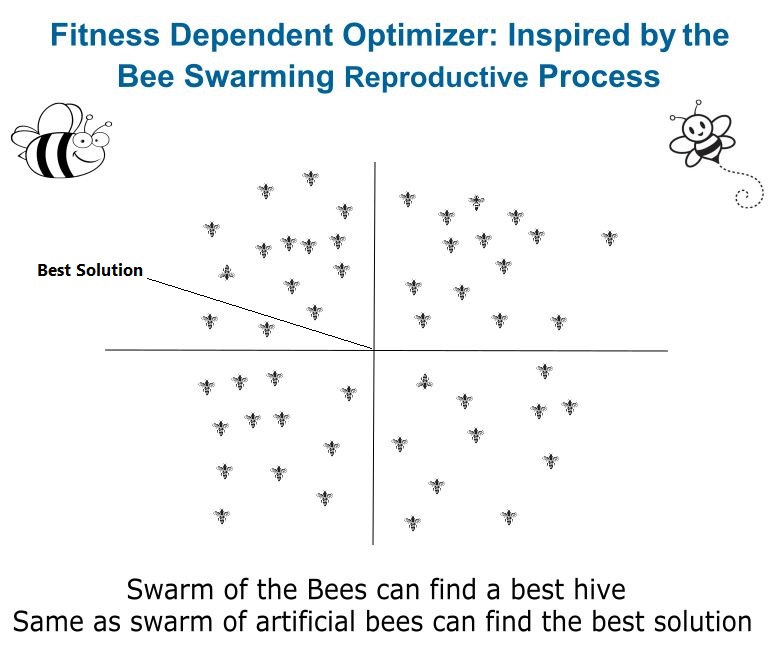

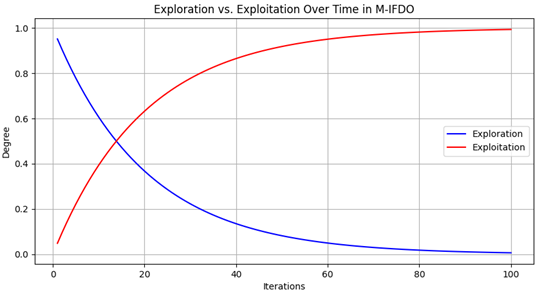

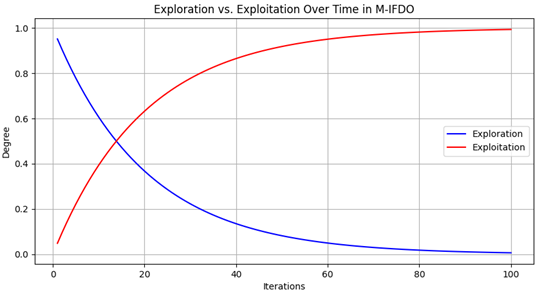

This study proposes a modified version of IFDO, called M-IFDO. The enhancement changes how IFDO updates the location of the scout bees so that they achieve better performance and reach optimal solutions. More specifically, two IFDO parameters, alignment and cohesion, are removed, and a Lambda parameter is used in their place. To verify its performance, M-IFDO is tested on 19 basic benchmark functions, 10 IEEE Congress on Evolutionary Computation functions (CEC-C06 2019), and five real-world problems. M-IFDO is compared against five state-of-the-art algorithms: Improved Fitness Dependent Optimizer (IFDO), Improving Multi-Objective Differential Evolution algorithm (IMODE), Hybrid Sampling Evolution Strategy (HSES), Linear Success-History based Parameter Adaptation for Differential Evolution (LSHADE) and CMA-ES Integrated with an Occasional Restart Strategy and Increasing Population Size and An Iterative Local Search (NBIPOP-aCMAES). The evaluation criteria are convergence behavior, memory usage, and statistical results. The results show that M-IFDO surpasses its competitors in several cases on the benchmark functions and the five real-world problems.

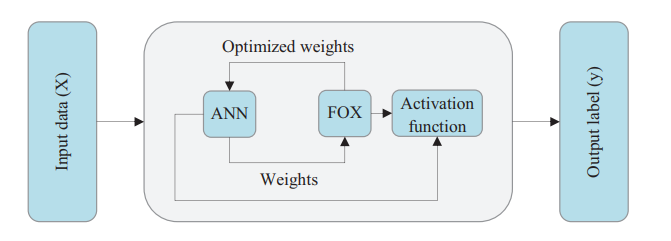

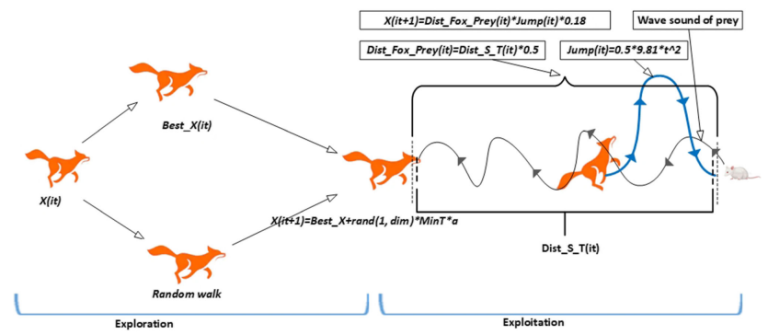

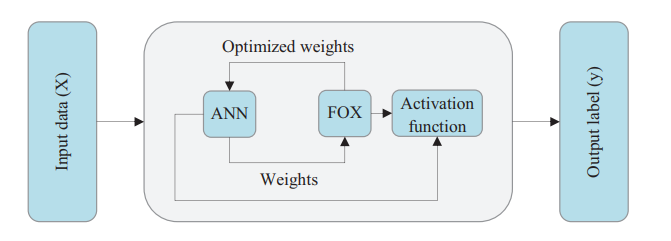

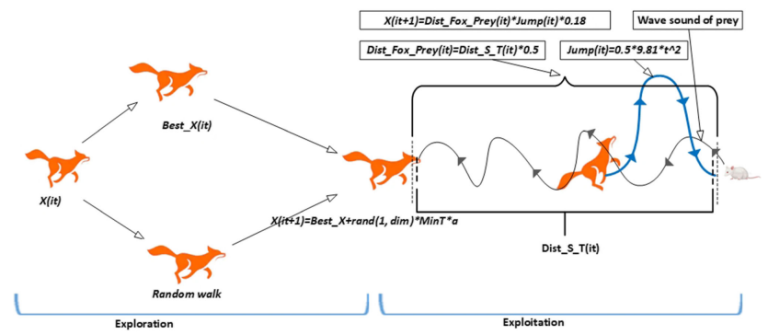

Fox Artificial Neural Network (FOXANN) is a novel classification model that combines the recently developed Fox optimizer with an ANN to solve ML problems. The Fox optimizer replaces the backpropagation algorithm in the ANN and optimizes the synaptic weights, achieving high classification accuracy with minimal loss, improved model generalization, and interpretability. The performance of FOXANN is evaluated on three standard datasets: Iris Flower, Breast Cancer Wisconsin, and Wine. The results show that FOXANN outperforms traditional ANN and logistic regression methods as well as other models proposed in the literature, such as ABC-ANN, ABC-MNN, CROANN, and PSO-DNN, achieving a higher accuracy of 0.9969 and a lower validation loss of 0.0028. These results demonstrate that FOXANN is more effective than traditional methods and other proposed models across standard datasets. Thus, FOXANN effectively addresses the challenges in ML algorithms and improves classification performance.
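The general idea of training a network with a metaheuristic instead of backpropagation can be sketched as follows. This is a minimal illustration under stated assumptions: the optimizer here is a simple elitist random-perturbation search used as a stand-in, not the Fox optimizer, and the network size, the XOR toy data, and all names (`predict`, `loss`, `optimize`) are hypothetical.

```python
import math
import random

N_IN, N_HID = 2, 3
# Flat layout: hidden weights, hidden biases, output weights, output bias.
N_WEIGHTS = N_IN * N_HID + N_HID + N_HID + 1


def predict(w, x):
    """Forward pass of a 2-3-1 network whose parameters live in the flat list w."""
    hidden = []
    for h in range(N_HID):
        s = w[N_IN * N_HID + h]                    # hidden bias
        for i in range(N_IN):
            s += w[h * N_IN + i] * x[i]            # hidden weight
        hidden.append(math.tanh(s))
    base = N_IN * N_HID + N_HID
    out = w[base + N_HID] + sum(w[base + h] * hidden[h] for h in range(N_HID))
    out = max(-60.0, min(60.0, out))               # guard against overflow in exp
    return 1.0 / (1.0 + math.exp(-out))


def loss(w, data):
    """Mean squared error of the network over (input, target) pairs."""
    return sum((predict(w, x) - y) ** 2 for x, y in data) / len(data)


def optimize(data, iterations=200, population=20, sigma=0.5, seed=0):
    """Stand-in search: perturb the best weight vector, keep any improvement."""
    rng = random.Random(seed)
    best = [rng.uniform(-1, 1) for _ in range(N_WEIGHTS)]
    best_loss = loss(best, data)
    history = [best_loss]
    for _ in range(iterations):
        for _ in range(population):
            cand = [v + rng.gauss(0, sigma) for v in best]
            cand_loss = loss(cand, data)
            if cand_loss < best_loss:              # gradient-free: only loss values are used
                best, best_loss = cand, cand_loss
        history.append(best_loss)
    return best, history


if __name__ == "__main__":
    xor = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
    weights, history = optimize(xor)
    print(f"initial loss {history[0]:.4f}, final loss {history[-1]:.4f}")
```

The key design point is that the optimizer treats the whole weight vector as one candidate solution and the loss as its fitness, so any population-based metaheuristic could be substituted for the search loop.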
